Continuous Integration (CI) is one of the most popular concepts in software engineering today. CI defines how artifacts are produced from your sources. In this issue, I’ll explain how The Ultimate Artifact is created for The Ultimate Commit through The Ultimate CI Pipeline.

My experience is primarily based on GitLab CI, so I might miss useful concepts from other CI tools, and some of my wording might be unfamiliar to you, but I believe the key ideas are fully transferable.

Overview

As described in The Ultimate Artifact post, I distinguish 3 types of artifacts: Library, Application, and Template (if you missed that post, I strongly recommend reading it first). They all need different types of CI pipelines. Let’s define the critical stages of continuous integration that are present in almost every type:

analyzing - check the code statically: run linters (eslint, ktlint, yamllint, etc.) and code analysis tools like SonarQube

compiling - compile sources to their final form, for example, .java files (Java source code) to .class files (bytecode that runs on the JVM). Transpilation (source code → source code) is a form of compilation, so ts → js belongs here as well, and so does documentation generation (.adoc → .pdf).

testing - test compiled code (unit and integration tests, for example, against a database)

building - pack compiled code (.class → .jar, .js → .tar.gz), pack documentation, etc.

releasing - mark sources as “released” with a tag if the pipeline is running on the default branch, and publish built artifacts to their artifact storage (if there is something to publish).

The Ultimate CI Pipeline requires these stages to run strictly in sequence. This strictness gives you the following guarantees:

you never compile poor quality sources accidentally because they are statically analyzed first

you never test sources that were not analyzed, so you don’t chase spurious errors caused by badly formatted code (for example, indentation in Python)

all builds have acceptable quality (this means that if it is acceptable for development to skip tests in a particular case, The Ultimate CI Pipeline doesn’t block you)

you never make accessible (publish) artifacts that were not built because of poor quality

all your artifacts satisfy the requirements you formulated as tests (unless you manually skip some of them as an exception)
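To make the "strictly sequential" idea concrete, here is a minimal sketch of a runner that executes the stages in order and stops at the first failure. It is not how GitLab CI or any other tool is implemented; `run_pipeline` and the `jobs` mapping are hypothetical names I use only for illustration.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Stage names taken from the list above; the order is the whole point.
STAGES = ["analyzing", "compiling", "testing", "building", "releasing"]


def run_pipeline(jobs: Dict[str, Callable[[], bool]]) -> Tuple[List[str], Optional[str]]:
    """Run stages strictly in order and stop at the first failing stage.

    `jobs` maps a stage name to a callable that returns True on success.
    Returns (completed stages, name of the failed stage or None).
    """
    completed: List[str] = []
    for stage in STAGES:
        job = jobs.get(stage)
        if job is None:
            continue  # this pipeline type does not use the stage
        if not job():
            return completed, stage  # later stages never run
        completed.append(stage)
    return completed, None
```

With this shape, a failing "testing" job means "building" and "releasing" are never reached, which is exactly the guarantee that nothing unverified gets published.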

The pipelines differ somewhat between artifact types. Individual stages might also contain a varying set of steps/jobs/tasks.

Pipeline Types

I would like to classify CI pipelines logically because, even though they look similar in general, some details differ. The classification is based on The Ultimate Artifact classification presented in the last issue.

Template Pipeline

This might be obvious, but templates usually do not have testing, building, or publishing stages because the sources already provide all the needed value. So, practically, they only need the following:

analyzing - check that a template follows common practices, the style is correct, etc.

releasing - mark a template as released (with tag)

In some cases, if it is necessary from a delivery perspective, the template might be packed, for example, to a tarball (in the building stage) and delivered to template storage (as an extended “releasing” stage). For such cases, it is better to consider the library pipeline.

Another example is GitLab CI templates. They don’t need to be published anywhere; only tagging is needed to point to a predictable template state.

A notification about the newly released template version might be sent in the releasing stage as an extra step.

Library Pipeline

The library pipeline fully leverages the pipeline stages described above. If many libraries are built in a monorepo, they all might be published. Let’s review the stages from the Library Pipeline perspective.

All the stages described above are relevant, but one thing is important here: we have to consider where to publish the artifacts. It often makes sense to separate production and development storage. For example, Maven separates release repositories (static artifact versions for production) and snapshot repositories (dynamic, overridable versions for development).

Another important point is that sometimes we cannot assign aliases to libraries (a technical constraint). In that case, we expect the library to be republished under the release version even if the code is unchanged.
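The release/snapshot split can be sketched in a few lines. The only fact I rely on is Maven's convention that snapshot versions end with `-SNAPSHOT`; the repository names, the `main` default branch, and the rule "non-default branches publish snapshots" are my assumptions for illustration, not something Maven enforces.

```python
def version_for_branch(base_version: str, branch: str, default_branch: str = "main") -> str:
    """Assumed policy: the default branch publishes a fixed release version,
    every other branch publishes an overridable snapshot of it."""
    if branch == default_branch:
        return base_version
    return f"{base_version}-SNAPSHOT"


def repository_kind(version: str) -> str:
    """Choose the target storage by Maven's -SNAPSHOT suffix convention.

    The returned names are hypothetical repository ids.
    """
    return "snapshots" if version.endswith("-SNAPSHOT") else "releases"
```

For example, `repository_kind(version_for_branch("1.4.0", "feature/login"))` selects the snapshot storage, while the same call for the default branch selects the release storage.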

Application Pipeline

The application pipeline also requires all stages. We should also analyze the sources and compile them. For interpreted languages, compilation is not necessary, but some sources might be used for code generation. For example, request handler stubs might be generated from API schemas. Testing might include integration testing because apps, unlike most libraries, might have connections with other components.

Building depends on how the app will be launched. If it targets an old-fashioned application server, building might look like the Library case. If it is an Android mobile app, you build an APK file. If the app runs directly on an OS, you build native binaries. But if we are talking about modern cloud-native development, we build Docker images, and packaging is not just packing compiled sources into some form of archive; it is about building a layered image, optimized by how frequently each layer changes (though this is a matter for another post).

In a perfect world, I’d say that we should be able to create aliases for any kind of artifact, but the most advanced approach available here is Docker tags. We mark the sources in the releasing stage, which triggers artifact building, and the mark helps to access and identify the artifact. This Ultimate Artifact should additionally be tagged with the version, which Docker does seamlessly.

The most important difference from libraries is that a successfully finished CI process should do something to prompt CD to do its work. For example, the final CI step might be to update the app's current version in the repo responsible for the integration environment.
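The three pipeline types can be summarized as data. The sketch below only restates the classification from this section; `ArtifactType`, `stages_for`, and `release_steps` are hypothetical names, and the step labels are free-form strings rather than real CI job names.

```python
from enum import Enum
from typing import List


class ArtifactType(Enum):
    TEMPLATE = "template"
    LIBRARY = "library"
    APPLICATION = "application"


ALL_STAGES = ["analyzing", "compiling", "testing", "building", "releasing"]


def stages_for(artifact_type: ArtifactType) -> List[str]:
    """Templates only need analyzing and releasing; the rest use every stage."""
    if artifact_type is ArtifactType.TEMPLATE:
        return ["analyzing", "releasing"]
    return list(ALL_STAGES)


def release_steps(artifact_type: ArtifactType) -> List[str]:
    """What the releasing stage does for each pipeline type."""
    steps = ["tag sources"]
    if artifact_type is not ArtifactType.TEMPLATE:
        steps.append("publish artifact")
    if artifact_type is ArtifactType.APPLICATION:
        # e.g. update the app version in the integration environment repo
        steps.append("trigger CD")
    return steps
```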

Considerations about Stages

Analyzing

Analysis helps you identify source issues early. Because The Ultimate Commit motivates you to commit smaller logical pieces, it becomes hard to drift far from project conventions.

Security scanners are also a part of the analysis step, so you can block any pipeline with potential security issues if you desire.

The Ultimate Commits are already published at this stage, so it is relatively late to validate how they are formed. I will describe how to establish The Ultimate Contribution in the next issue. Subscribe so you don’t miss it.

Compiling

It only makes sense to separate compilation if your build system supports output caching. In other words, if you can run compilation and then run tests separately without recompiling, compilation works as an individual step.
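Real build systems implement output caching in their own, much more sophisticated ways. Just to illustrate the principle, here is a toy sketch that fingerprints the sources and skips compilation when nothing has changed; `compile_if_changed`, the stamp file, and `compile_fn` are hypothetical and not part of any build tool.

```python
import hashlib
from pathlib import Path
from typing import Callable, Iterable


def sources_fingerprint(files: Iterable[Path]) -> str:
    """Hash file paths and contents so any source change alters the result."""
    digest = hashlib.sha256()
    for path in sorted(files):
        digest.update(str(path).encode("utf-8"))
        digest.update(path.read_bytes())
    return digest.hexdigest()


def compile_if_changed(files: Iterable[Path], stamp: Path, compile_fn: Callable[[], None]) -> bool:
    """Run compile_fn only if the sources differ from the last recorded run.

    Returns True if compilation ran, False if the cached output was reused.
    """
    fingerprint = sources_fingerprint(files)
    if stamp.exists() and stamp.read_text() == fingerprint:
        return False  # outputs are still valid, the testing stage can reuse them
    compile_fn()
    stamp.write_text(fingerprint)
    return True
```

If the testing stage calls the same function with unchanged sources, it gets `False` back and goes straight to running tests, which is what makes a separate compiling step worthwhile.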

Testing

Should we be able to disable this stage for the sake of development speed? In a perfect world we shouldn’t, but reality is not perfect. The Ultimate Development Experience is another topic, but briefly: testing is crucial, and the longer we go without it, the less predictable our quality becomes. If we are completely sure about every commit, we minimize our stress. Why would we want to disable tests? The only reason I can imagine is to make deployment in development faster and to postpone test fixes until the end of feature development. We might trade quality for a better development/maintenance experience, but testing and security will be impacted. I think it is better to look at solutions that simply improve the development experience. For cloud-native apps, you can consider the following options: local deployments, Skaffold, Telepresence.

Building

Here we have a similar story to the compiling stage. We must reuse the results of the previous stages and pack them into artifact(s). Building performance can usually be improved with different caching strategies. We pack what we already compiled; if we slice Docker images properly, we can reuse the layers that contain the framework and libraries. We might also pack only the changed parts of compiled code (relevant for monorepos, but I’m not a big fan of those for microservices).

Releasing

Depending on the pipeline type, releasing might include both publishing and tagging or only tagging. The same goes for notifications. For example, if you want to release a GitLab CI template, you don’t need to publish anything; you only tag the sources.

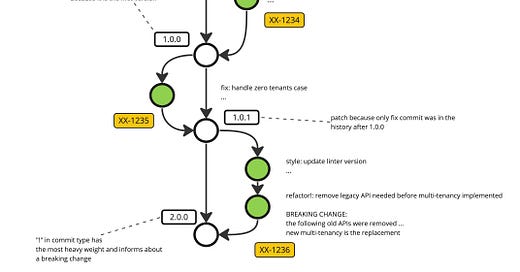

Auto-tagging with a version is another interesting advantage of The Ultimate CI Pipeline. Because The Ultimate Commit is the foundation for the pipeline, we can compute the next artifact version from git history. For example, tools like Semantic Release and Commitizen will help you compute the next version and publish it to your VCS server in the form of a tag or an updated file. Let me briefly explain how it works:
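The idea can be sketched as follows. This is a simplified illustration, not the actual algorithm of Semantic Release or Commitizen. I assume commit messages follow a Conventional Commits style header (`type(scope): subject`), that `fix` means a patch bump, `feat` a minor bump, and a `!` marker or a `BREAKING CHANGE:` footer a major bump; `bump_level` and `next_version` are hypothetical names.

```python
import re
from typing import Iterable

# Assumed header shape: type, optional (scope), optional "!", then ": ".
HEADER = re.compile(r"^(?P<type>\w+)(\([^)]*\))?(?P<breaking>!)?: ")


def bump_level(message: str) -> int:
    """Return 3 for major, 2 for minor, 1 for patch, 0 for no release."""
    match = HEADER.match(message)
    if match is None:
        return 0  # message does not follow the convention, ignore it
    if match.group("breaking") or "BREAKING CHANGE:" in message:
        return 3
    if match.group("type") == "feat":
        return 2
    if match.group("type") == "fix":
        return 1
    return 0


def next_version(current: str, messages: Iterable[str]) -> str:
    """Compute the next MAJOR.MINOR.PATCH version from commits since the last tag."""
    major, minor, patch = (int(part) for part in current.split("."))
    level = max((bump_level(m) for m in messages), default=0)
    if level == 3:
        return f"{major + 1}.0.0"
    if level == 2:
        return f"{major}.{minor + 1}.0"
    if level == 1:
        return f"{major}.{minor}.{patch + 1}"
    return current  # nothing release-worthy happened
```

The releasing stage would feed this with the commit messages since the last tag and then push the result as a new tag. Note that a message that doesn't match the convention is silently ignored here, which is why compliance with commit conventions matters so much for auto-versioning.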

Conclusion

The Ultimate CI Pipeline defines how to transform The Ultimate Commit into The Ultimate Artifact; at the same time, both are prerequisites for The Ultimate CI Pipeline. The unified approach, with its benefits, helps you stop worrying about CI at all. One important question remains: how do we guarantee that The Ultimate Commits comply with conventions? Otherwise, auto-versioning won’t work.

In the next issue, we will define how to make The Ultimate Contribution, which includes The Ultimate Branch, The Ultimate Merge Request, and The Ultimate Commit Push, all of which help ensure compliance with conventions. Subscribe so you don’t miss it.