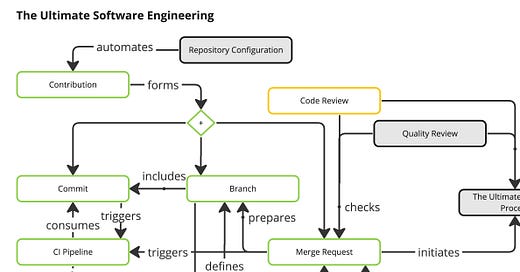

Code Review is a vital component of professional software engineering. This practice helps to find bugs early, educate and share knowledge. The Ultimate Contribution we discussed in the previous issue requires The Ultimate Code Review to make the contribution successful.

Code Review might include manual practices as well as automated ones. So we will talk about both. But before we start, let’s understand our motivation to think about ultimacy.

I provide a few examples of tools in the post, motivation is just my awareness about them and this is not sponsored. Despite of this fact analyse how suitable they are for you before using.

Motivation

Despite the rationale behind many of our decisions in IT, we still have cargo cults for some practices. So to start here, I would suggest discussing the real quantifiable answer to “Why do we need code review at all?”.

A few factors bring motivation for code review:

There are a few actors in code writing collaboration. Those who write some particular code, other ones who do not know anything about this code or at max partially know, those who wrote this code 6 months before, and others who will maintain this code.

Developer tenure in a company might be long, but high likely ~2 years on average.

Program comprehension takes about 50% of developer work.

Those three factors above tell us that we must collaborate and exchange knowledge to avoid expertise loss and team performance impact on time. The more people understand a particular code, the more sustainable it is.

Properties of The Ultimate Code Review

The Ultimate Code Review:

is based on The Ultimate Commit

is a part of The Ultimate Contribution

keeps a history of communication to share knowledge

guides a reviewer

focuses on business-logic and automates other aspects of the review

maintains a culture of collaboration and learning

Benefits of The Ultimate Code Review

The Ultimate Code review is a part of the Ultimate Contribution. It means that atomic commits come to the code review stage. So, one of the important benefits is the opportunity to review commits individually. Imagine you separate your feature development to feature / refactoring / ci changes. Three different commits can be reviewed separately or together depending on the size of the changed code base. That drastically simplifies the review process, but at the same time, separated commits review is an optional thing.

Another important aspect is that The Ultimate Artifact is built for commits in merge requests (a.k.a. pull requests). It means that reviewers see the results of the Ultimate CI pipeline and have a wider picture of code quality metrics. Seeing not covered code lines, a reviewer can recommend changes or detect potential bugs easier. We should remember that the presence of code coverage guarantees bug detection.

Every comment of the Ultimate Code Review is an opportunity to learn and share knowledge. The fact that we store all discussion results will help developers in many years to understand the logical path of how a particular change was introduced.

The Ultimate Code Review reduces navigation complexity and repeated activities by guiding a reviewer. So the reviewer can focus on the most important 400 LOC at a time. According to research at the end of the article, this should drastically improve the defect density rate.

The right mood in code review makes relationships in the team better and creates a space for collaboration and peer support.

Some practices that help to improve code are already automated, some of them can be automated, but others are purely manual. Let’s sort through them.

Automated practices

I believe Software Engineering must be more creative than routine. So we must automate all the things that we can be automated.

Code Quality

One of the important parts of code review that requires a ton of attention is code quality checks. Modern static code analysis tools help to understand what problems exist, let’s mention a few of them:

Language-specific linters will check guidelines compliance, for example, ktlint will help to check kotlin best practices, yamllint will validate the same way of indentation everywhere.

Static analysis tools like SonarQube will help to detect code smells and bugs, and others like Snyk will automate static security checks against known security issues.

Test coverage tools like JaCoCo in collaboration with Gitlab Code Coverage reports (via Cobertura adapters) embedded into your Merge Requests or comprehensive tools like Codecov will emphasize lines that might be important to review carefully (but not necessary)

Local git hooks will format your code before committing to minimize remote code updates. Technically, it is not a part of the review process but helps to avoid misunderstanding why proper formatting was not applied

AI Reviewer

I have not applied modern AI reviewers in practice yet and do not consider them a comprehensive replacement for reviewers, but at the same time, they should be great reviewer assistants. The reason is pretty simple, if you can detect a problem without human attention, do that! But you have to keep in mind two risk factors:

the most of AI review tools are cloud-based, which means that they upload your code somewhere, sometimes, models learn from your code to provide better results, so this is a potential attack vector and security breach.

the accuracy of outcomes <100% so you might see “false positives”.

The key difference between AI analyzers and code structure analyzers is accuracy. The modern AI models cannot guarantee 100% accuracy of their outcomes but can find things that are impossible to find with old-school code structure analyzers.

I heard about CodiumAI and CodeGuru (by AWS), it is just an example, not a recommendation, and you definitely will find more.

Review Tools

Can we send the code via email to review in the form of the archive? For sure! The key question is what is the effectiveness of this process. I saw a lot of tools that help you review your code, but the best foundation is IDE, with all the well-known tools you use for program comprehension. Fortunately, in the latest releases, Intellij IDEA team informed us about their native integration with gitlab which sounds like the first great step to simplify code review.

Manual practices

Strive to follow 4 eye principle

Even if you have all possible automation, ask someone to review your code. 4 eyes principle comes from risk management and means any important decision must be reviewed by someone else. There are possible automations to ensure that rule, for example, Gitlab allows to set a minimal set of reviewers.

The single reason to comment on style issues

If you comment on things like redundant blank lines, it means that you have wrongly configured / not configured the linter. Never spend your time on such things. It is extremely inefficient. But there is an exclusion - misconfiguration. Just ask the developer to update the linter config instead of fixing every occurrence of the issue

Choose the relevant reviewers

There are at least three options to chose relevant reviewers

reviewers are identified as code owners

you know that the change will implicitly impact someone

you need someone with expertise

Code Owners can be automatically assigned according to a dedicated file in the repository (Gitlab example)

Depending on the developer's experience in the project, reviewers should be chosen by their lead engineer or by them. The less experience developer has, the more important to help to choose the right people to review.

Resolve reviewer comments only with their permission

Three actions that a reviewer does:

leaves a comment

resolves their comment

approves

Sometimes comments might not be perfect, and misunderstandings might happen. So, I’d recommend using “Approval” as a sign that the reviewer is fine with the current state and any other changes, but if a developer understands that the MR (a.k.a. PR) should be reviewed again, they should request it explicitly. If a reviewer didn’t set an approval, you might consider that the reviewer wants to double-check some changes. If comments do not require a second review and are marked as optional, the developer can mark them as “resolved” themselves.

The Ultimate Approval Process is out of the scope of this post, so we discuss that topic in the next issue. Subscribe not to miss

Avoid long discussions

Sometimes people might start debates in code review format because of different opinions. If there are no objective reasons to change code immediately, postpone the discussion, schedule a meeting, or use your tech syncups to discuss in a wider quorum.

Focus on things that are hard to change

Some changes are pretty cheap to introduce (like code style), and others are really hard (API with external users). If you understand that it is not too expensive to change the decision later, consider postponing the discussion if it takes too much time. Try to follow “Delay Design Decisions until they are absolutely necessary” practice. But keep in mind that some decisions are important to discuss early (for example, API design, db schema, etc.)

Include a checklist for reviewers for routine activities

Reviewers should not care about routine activities. Just use a checklist that defines what is important to check in the context of your project. Review documentation for libraries? Exposed interfaces? Configured authorization? All the typical things that can be met in any feature should be formulated as a checklist. This is the only thing, except for automation, that can help almost to guarantee team rules satisfaction.

Create a template for MR description (a.k.a. PR)

A checklist is one of the other typical things for code review, so it is useful to preconfigure a template for MR that helps fill in all the details and contains everything you prefer not to forget.

Annotate code in places where you want to get more focus

If a developer invites someone with specific expertise to review, they should not forget to annotate important pieces (for example, with comments) to align the focus of a reviewer.

Breakdown and order pieces of code review

The Ultimate Commit includes Conventional Commits that define atomic commits. This is one of the most powerful tools to make reviews simpler. Depending on code review size, a reviewer can review all commits at once or slip through refactorings, style changes, and other things that might be considered unimportant. When a reviewer has a relatively small commit to review, they might simplify it even more and follow the right order, for example, following the recommendations of a developer or common sense.

Focus on business

This is pretty obvious, but the reviewer has to focus on the business side of changes and help to validate that the requirements were satisfied. If not, neither API nor db schema does make sense. One of the options to validate requirements is to start with tests that assert behaviors. BDD might be valuable for such things.

Focus on things that are hard to change

When requirements are satisfied, you can focus on the things that are hardest to change, for example:

Sync & Async APIs

DB schema

Integrations

Formulate a backlog for automation

Sometimes you detect something that might be checked automatically, but at the same time, implementation is too complex for the timings of a particular feature development. So, create a backlog for automation and solve it one by one, don’t waste your time commenting if the automation is presented in the backlog. Once the automation is implemented, all occurrences will be fixed.

A recommendation is not a dictation

Recommend changes, not dictate. Rules, not people, manage the best systems, so if you prefer to do something in a particular way and believe it is the best one, discuss it with the team outside the MR, and formulate rules. Otherwise, it will require explaining motivation in every new MR.

Explain motivation

Sometimes the motivation behind your proposal for changes might not be clear. A discipline of explanation makes your opinion argumented.

Propose options to solve

Suppose there are a few ways to introduce some changes. Formulate and propose them. Your comparison of variants will help a developer choose the most appropriate one or even propose a better one on top of your options. If you do not know how to improve and this requires investigation, inform the developer.

Discussions must be recorded in the form of a comment

Sometimes changes require verbal discussions. We usually do it in person or via tools like Zoom. Results of those discussions must be saved in text in the particular merge request to share results with others.

Conclusion

The described practices will help your team to reach the properties of The Ultimate Code Review. Subsequently, this will introduce the mood of a collaborative and support-focused vibe. The process will become simpler, and quality will be increased.

In the next issue, we will discuss The Ultimate Approval Process as a part of The Ultimate Contribution. Subscribe not to miss.

Other useful resources

Instead of the video of the week, I’d recommend looking at the following papers:

Expectations, Outcomes, and Challenges of Modern Code Review